PI4 interns Nuoya Wang and Matthew Ellis spent this summer doing research under the direction of Dr. Lebauer, a Research Scientist at the Photosynthesis and Atmospheric Change Lab of UofI’s Energy Biosciences Institute. Below is their report.

This summer we focused on building a sugarcane canopy model and investigating the effect of direct sunlight on photosynthesis.

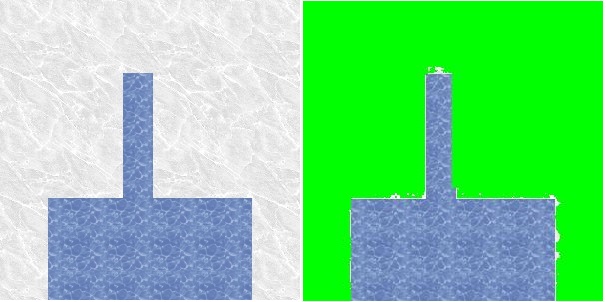

The first task is to extract features from a 3D image of a single canopy. The data are stored as a triangular mesh, which means the leaves and stems are divided into small triangles. This suggests that we can use neighborhood relationships between vertices to define the structure of the canopy.

The height of the canopy is easy to find: we take the points with the largest and smallest z-coordinates, and their difference is the height. The main task is therefore to extract leaf length, leaf width and leaf curvature.
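As a minimal sketch of the height step, assuming the mesh vertices are available as a list of `(x, y, z)` tuples (the function name `canopy_height` and the sample coordinates are made up for illustration):

```python
def canopy_height(vertices):
    """Height = largest z-coordinate minus smallest z-coordinate."""
    zs = [z for _, _, z in vertices]
    return max(zs) - min(zs)


# Hypothetical sample vertices, not taken from the real canopy data.
vertices = [(0.0, 0.0, 0.1), (0.2, 0.1, 1.5), (0.3, 0.4, 2.6)]
height = canopy_height(vertices)
```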

To obtain the features of a leaf, we need to divide the plant into individual leaves and analyze each one. Since the plant is represented by a triangular mesh, we can interpret it as a graph: we find the unique vertices and construct the adjacency matrix. For example, if there are 10,000 unique points, the adjacency matrix is 10,000×10,000, and if points 10, 100 and 1000 form a triangle, then the (10, 100), (100, 10), (10, 1000), (1000, 10), (100, 1000) and (1000, 100) elements of the matrix are set to 1.
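The mesh-to-graph step can be sketched as follows. This is an illustration rather than the code used in the project: it assumes each triangle is given as three `(x, y, z)` tuples, and it stores the adjacency as a dictionary of neighbor sets instead of a dense 10,000×10,000 matrix, which holds the same information in less memory. The names `index_vertices` and `build_adjacency` are hypothetical.

```python
from collections import defaultdict


def index_vertices(triangle_coords):
    """Give every unique vertex an integer id and rewrite triangles as id triples."""
    index_of = {}
    triangles = []
    for triangle in triangle_coords:
        ids = []
        for point in triangle:
            if point not in index_of:
                index_of[point] = len(index_of)
            ids.append(index_of[point])
        triangles.append(tuple(ids))
    return list(index_of), triangles


def build_adjacency(triangles):
    """Mark the three vertex pairs of every triangle as neighbors (symmetric)."""
    adjacency = defaultdict(set)
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (a, c)):
            adjacency[u].add(v)
            adjacency[v].add(u)
    return adjacency


# Two triangles sharing an edge; coordinates are made up for illustration.
triangle_coords = [
    ((0, 0, 0), (1, 0, 0), (0, 1, 0)),
    ((1, 0, 0), (0, 1, 0), (1, 1, 0)),
]
vertices, triangles = index_vertices(triangle_coords)
adjacency = build_adjacency(triangles)
```

A nonzero entry (i, j) of the adjacency matrix corresponds to `j in adjacency[i]` here.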

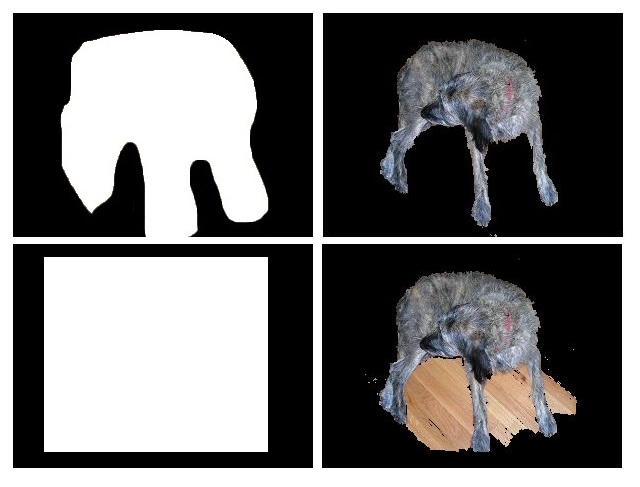

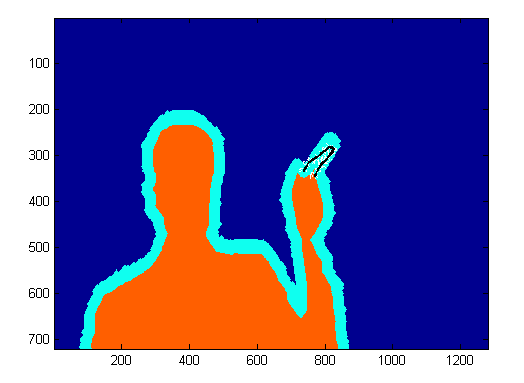

We can use a connected-components algorithm to split the graph into isolated components, for example a breadth-first or depth-first traversal (a shortest-path routine such as Bellman-Ford also works, because every vertex it reaches from a source lies in the same component, but it is slower than needed). In our sample data this is straightforward because the leaves are not actually attached to the stem, so the stem is isolated from the leaves. If the data come from a reconstruction of real-world measurements, we need to clean them first; for example, we can count the neighbors of each vertex and delete the vertices with few neighbors, which separates some of the attached parts. In figure 1, the different components are marked with different colors.
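A minimal sketch of the component split, using a breadth-first traversal over a neighbor-set dictionary. The traversal choice and the name `connected_components` are assumptions made for illustration; any connected-components routine gives the same grouping.

```python
from collections import deque


def connected_components(adjacency):
    """Return a list of components, each a sorted list of vertex ids."""
    seen = set()
    components = []
    for start in adjacency:
        if start in seen:
            continue
        seen.add(start)
        queue = deque([start])
        component = []
        while queue:
            u = queue.popleft()
            component.append(u)
            for v in adjacency[u]:
                if v not in seen:
                    seen.add(v)
                    queue.append(v)
        components.append(sorted(component))
    return components


# Toy graph: one triangle (a "leaf") and one separate edge (a "stem").
adjacency = {0: {1, 2}, 1: {0, 2}, 2: {0, 1}, 3: {4}, 4: {3}}
components = connected_components(adjacency)
```

Each returned component can then be treated as one leaf (or the stem) in the later steps.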

Now that we have isolated a single leaf in the previous step, we can analyze it. The algorithm we use is the minimum spanning tree algorithm.

In our problem, the weight of an edge is the Euclidean distance between its two endpoints. After applying the minimum spanning tree algorithm, the less important connections are dropped; the result is shown in figure 2:

Left: Figure 1; Right: Figure 2.
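The minimum spanning tree step with Euclidean edge weights can be sketched with Prim's algorithm. The report does not say which spanning-tree algorithm was used, so Prim's is an assumption, as are the name `minimum_spanning_tree` and the toy coordinates. The sketch expects one connected component.

```python
import heapq
import math


def minimum_spanning_tree(points, adjacency):
    """Prim's algorithm; edge weight = Euclidean distance between endpoints.

    points: {vertex id: (x, y, z)}, adjacency: {vertex id: set of neighbor ids}.
    Returns the tree as {vertex id: set of neighbor ids}.
    """
    start = next(iter(adjacency))
    in_tree = {start}
    tree = {v: set() for v in adjacency}
    heap = [(math.dist(points[start], points[v]), start, v) for v in adjacency[start]]
    heapq.heapify(heap)
    while heap:
        _, u, v = heapq.heappop(heap)
        if v in in_tree:
            continue  # this edge would close a cycle
        in_tree.add(v)
        tree[u].add(v)
        tree[v].add(u)
        for nxt in adjacency[v]:
            if nxt not in in_tree:
                heapq.heappush(heap, (math.dist(points[v], points[nxt]), v, nxt))
    return tree


# Unit square split into two triangles along the diagonal 0-2.
points = {0: (0, 0, 0), 1: (1, 0, 0), 2: (1, 1, 0), 3: (0, 1, 0)}
adjacency = {0: {1, 2, 3}, 1: {0, 2}, 2: {0, 1, 3}, 3: {0, 2}}
tree = minimum_spanning_tree(points, adjacency)
```

In this toy example the long diagonal is dropped and only three unit-length edges remain.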

It is clear from figure 2 that the path connecting the two red points can be used to estimate leaf length, so we used a shortest-path algorithm to find this path and compute its length. The two red points are defined as the pair of points with the longest Euclidean distance between them. Leaf width can also be calculated from the minimum spanning tree. Our algorithm checks every point on the path connecting the two red points, finds its two closest neighbors, and defines the leaf width at that point as the sum of the distances to those two neighbors. We then take the largest of these widths as the width of the whole leaf. Finally, once we have the Euclidean distance between the two red points and the length of the path connecting them, we can derive the leaf curvature from these two quantities.
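The leaf-length step can be sketched as below, assuming the spanning tree is stored as a neighbor-set dictionary. In a tree the path between two vertices is unique, so a plain depth-first search stands in for the shortest-path algorithm. The function names are hypothetical, and the final length-to-chord ratio is only one possible curvature proxy, not a formula stated in the report.

```python
import math
from itertools import combinations


def farthest_pair(points):
    """Pair of vertex ids with the largest Euclidean distance (brute force)."""
    return max(
        combinations(points, 2),
        key=lambda pair: math.dist(points[pair[0]], points[pair[1]]),
    )


def tree_path(tree, source, target):
    """Unique path from source to target in a tree, found by depth-first search."""
    parent = {source: None}
    stack = [source]
    while stack:
        u = stack.pop()
        if u == target:
            break
        for v in tree[u]:
            if v not in parent:
                parent[v] = u
                stack.append(v)
    path = [target]
    while parent[path[-1]] is not None:
        path.append(parent[path[-1]])
    return path[::-1]


def path_length(points, path):
    """Sum of Euclidean distances between consecutive vertices on the path."""
    return sum(math.dist(points[a], points[b]) for a, b in zip(path, path[1:]))


# Toy "leaf": three points forming an arch; coordinates are made up.
points = {0: (0, 0, 0), 1: (1, 0, 1), 2: (2, 0, 0)}
tree = {0: {1}, 1: {0, 2}, 2: {1}}

start, end = farthest_pair(points)
path = tree_path(tree, start, end)
leaf_length = path_length(points, path)
chord = math.dist(points[start], points[end])
length_to_chord = leaf_length / chord  # assumed curvature proxy; 1.0 means flat
```

The brute-force pair search is quadratic in the number of vertices, which is acceptable for a single leaf but would need a faster method for a whole canopy.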

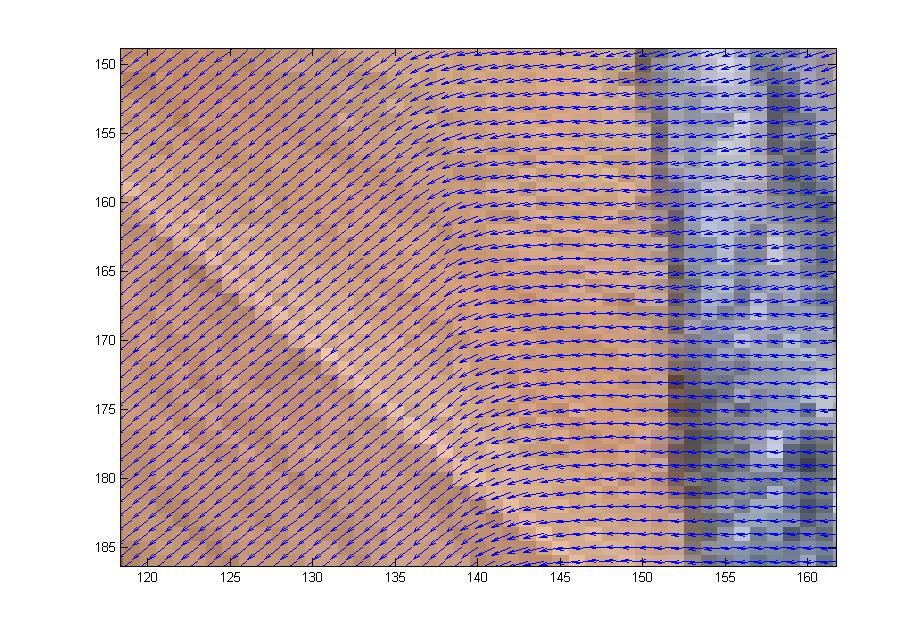

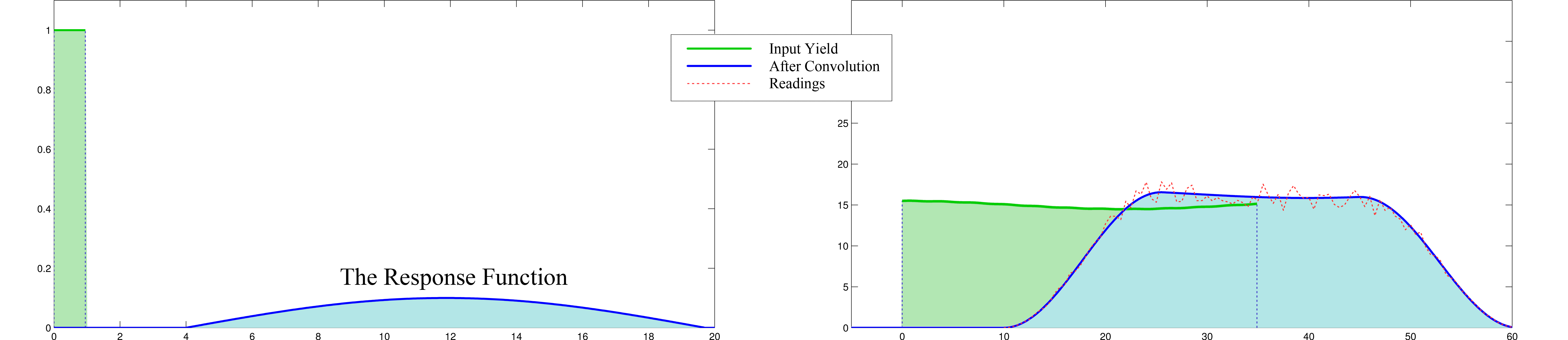

The second task of the summer project was to study the effect on photosynthesis. We considered only direct sunlight. Our goal was to find the relationship between the sunlit area ratio and height. The canopy model is the same triangular mesh model described above.

In this part we work with all vertices and introduce randomness into our algorithm. The basic principle is simple. First, we group the vertices of all triangles according to their heights. Second, we randomly choose some points in one layer (that is, one group) and check whether each of them is shaded by points at a higher level. For example, suppose a group contains 1000 points and we choose 200 of them. If 100 of the sampled points turn out to be shaded, we assume that 500 points are shaded in the original group. This simple sampling method makes the algorithm considerably more efficient, because the shading test runs on only a fraction of the points.
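The sampling idea can be sketched as follows. The report does not give the shading test itself, so `is_shaded` is a hypothetical callback (here a stand-in that marks half of the points as shaded); `group_by_height`, `estimate_shaded_count` and the layer thickness are likewise assumptions for illustration.

```python
import random


def group_by_height(points, layer_thickness):
    """Map layer index -> list of (x, y, z) points whose z falls in that layer."""
    z_min = min(z for _, _, z in points)
    layers = {}
    for point in points:
        layer = int((point[2] - z_min) // layer_thickness)
        layers.setdefault(layer, []).append(point)
    return layers


def estimate_shaded_count(layer_points, is_shaded, sample_size, rng):
    """Test a random sample and scale the shaded count up to the whole layer."""
    if not layer_points:
        return 0.0
    sample = rng.sample(layer_points, min(sample_size, len(layer_points)))
    shaded_in_sample = sum(1 for point in sample if is_shaded(point))
    return len(layer_points) * shaded_in_sample / len(sample)


# 1000 made-up points that all fall into a single layer.
points = [(i * 0.01, 0.0, i * 0.001) for i in range(1000)]
layers = group_by_height(points, layer_thickness=1.0)


def is_shaded(point):
    # Placeholder for the real test against higher layers and the sun direction.
    return point[0] < 5.0


rng = random.Random(0)  # fixed seed so the sketch is reproducible
estimate = estimate_shaded_count(layers[0], is_shaded, sample_size=200, rng=rng)
```

With 200 sampled points of which 100 are shaded, the scaling step gives 1000 × 100 / 200 = 500, matching the example in the text; the estimate from a random sample will vary around the true count.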

The results for the direct sunlit ratios follow. In figure 3, the x-axis is the height and the y-axis is the ratio of shaded area. We tried several sunlight angles and obtained different results.

Figures 3 and 4 show the results. In figure 3, the azimuth angles for the three panels are 30, 60 and 90 degrees; black lines are the results for elevation=30, blue lines for elevation=60 and red lines for elevation=90. In figure 4, the elevation angles for the three panels are 30, 60 and 90 degrees; magenta lines are the results for azimuth=0, black lines for azimuth=30, blue lines for azimuth=60 and red lines for azimuth=90.